Introduction

This is the fourth in a series of articles exploring the Gleam programming language. The first article explored some of the most basic features of Gleam; just enough to say hello. The second discussed looping constructs, namely that Gleam doesn’t have them. The third was supposed to be about parallel programming and OTP, but it ended up being more about looping and recursion.

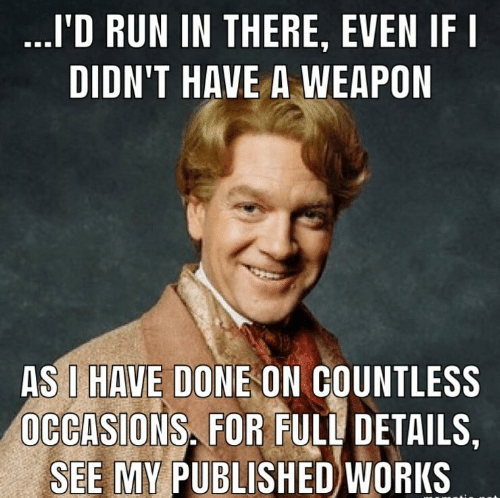

So this one is about parallel programming and OTP. Every other programming text I’ve written leaves concurrency until quite late because most programming languages make concurrency hard to get right (My will-never-be-published book on Rust took very serious issue with their “fearless concurrency” claims).

However, Gleam is backed by Erlang, and Erlang is famous for two things: a completely different paradigm from anything anywhere else, and concurrency that just works. Maybe the two go hand in hand… I never did learn Erlang. The syntax is just too ugly, and I am a man of refined tastes (he says while reaching into a huge bag of no-name branded potato chips).

Patreon

If you find my content valuable, please do consider sponsoring me on Patreon. I write about what interests me, and I usually target tutorial-style articles for intermediate to advanced developers in a variety of languages.

I’m not sure how far I’m going to go with this series on Gleam, so if you’re particularly interested in the topic, a few dollars a month definitely gives me a bit of motivation to keep it up. It’s not that it makes a difference in my life (I’m a salaried programmer, after all), but I’ve found the tangible appreciation keeps me invested.

An Evil Parallel Problem

Let’s try to crack some passwords. Ok, let’s not. It’s a bit disturbing that this was the first highly parallel problem to come to mind. Let me be clear up front: I have no real-world experience with hacking passwords.

We all know (I hope) that passwords should not be stored in a database in plain text. If the database gets hacked, then every user’s password is immediately exposed. And users are notoriously bad at not reusing passwords, so that means the hackers have access to the user’s e-mail, which means they have access to reset password flows…. eek. No plaintext passwords, please!

Then again, don’t get me started on how much I hate multi-factor authentication. I mean, I know it’s necessary, but my god what an inconvenience. Look. You went and got me started.

Ahem. Right. Hacking.

Instead, the password should be hashed using a “one-way hash” function: one that can easily convert a piece of data to another piece of data, but not the inverse.

Normally, we also “salt” the password. Salting means mixing some random, per-user data into the password before hashing it, so that identical passwords produce different hashes and a brute-force attacker such as ourselves can’t reuse one precomputed table of hashes against every account. Unfortunately, the system we are decidedly not trying to hack today did not use salting. The system also tried to make our lives (as the hackers we are not) easier by enforcing rules such as minimum and maximum password lengths.
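To make the idea concrete, here is a minimal sketch of salting, using the gleam_crypto package that is introduced in the SHA256 section. The new_salt and salted_hash names and the 16-byte salt length are assumptions made up for illustration, and SHA256 remains far too fast for real password storage; the sketch only shows where the salt goes.

```gleam
import gleam/bit_string
import gleam/crypto

// Hypothetical helper: a fresh random salt, to be stored next to the hash.
// 16 bytes is an arbitrary length chosen for this sketch.
pub fn new_salt() -> BitString {
  crypto.strong_random_bytes(16)
}

// Hypothetical helper: hash the salt and the password together.
// The same password hashed with two different salts gives two different hashes.
pub fn salted_hash(salt: BitString, password: String) -> BitString {
  let salted = bit_string.append(salt, bit_string.from_string(password))
  crypto.hash(crypto.Sha256, salted)
}
```

Because every account gets its own salt, an attacker has to redo the whole brute-force search per account instead of once for the entire database.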

Did you know that it’s technically easier to brute force attack passwords on sites that ask you to include a mixture of uppercase and lowercase letters, numbers, and punctuation in every password? All of those rules reduce the total number of potential passwords that we need to test. If you maintain such a website, update your password policy.

For this example, the site’s rules are very simple and very stupid:

- Password must be 6 characters long

- Password must have all lower-case letters

It’s like it’s begging to be hacked, right? Somebody please warn these site administrators that we’re going to hack their password policy in under five minutes.
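For the record, here is how small that search space is. With 26 lowercase letters and exactly 6 positions, the number of candidate passwords is

$$26^6 = 308{,}915{,}776$$

Had the policy only capped the length at 6 instead of fixing it, an attacker would also have to try every shorter string:

$$\sum_{k=1}^{5} 26^k = 12{,}356{,}630$$

So even the "exactly 6 characters" rule trims the candidate list a little.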

SHA256

In addition to failing to salt passwords and having a stupid password policy, the imaginary system (I swear it is imaginary) we are trying to hack made the mistake of using SHA256 to hash the passwords. The reason this is dumb is that it is super fast to calculate SHA256. That means we can calculate far more of them per unit of time than if we used something saner such as PBKDF2.

Actually, the real reason I chose SHA256 is that it was easy to find a library that calculates SHA256 for Gleam. The gleam_crypto package gives us access to calculate SHA256 sums. So let’s add that using gleam add gleam_crypto. Presumably this library will grow over time to include other common hashing algorithms including the aforementioned PBKDF2 and the Blake2 and Blake3 algorithms popular in the blockchain world.

The gleam_crypto package works with binary data (called bit_strings in Gleam) instead of textual data (which is Unicode). So let’s start our nefarious plot by pretending that we are (well-meaning, but naive) good guys. Here’s a program that, given a string command line argument, converts it to a bit_string:

    import gleam/bit_string
    import gleam/erlang
    import gleam/io
    import gleam/list
    import gleam/result

    pub fn main() {
      assert Ok(password) =
        erlang.start_arguments()
        |> list.at(0)
        |> result.map(bit_string.from_string)

      io.debug(password)
    }

This example uses the same start_arguments and list.at functions that we saw in Part 1 to extract a command line argument. Recall that list.at returns a Result type. We don’t actually want a string result, though; we want a bit_string. So we pipe the output of list.at into the result.map function. That function applies an arbitrary function to whatever is “inside” the Result and returns a new Result containing the output of that function. An Error is passed along untouched.

In this case, said function is bit_string.from_string, which converts the string to binary data in UTF-8 encoding. So now we have a Result wrapping a bit_string, and we use assert to extract the value from the result, shamelessly crashing if no argument was passed.
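If result.map is new to you, this small sketch shows both of its behaviours in isolation. The length_of helper is hypothetical and exists only for this illustration; it uses string.length where the program above uses bit_string.from_string.

```gleam
import gleam/result
import gleam/string

// Hypothetical helper, only here to show what result.map does.
pub fn length_of(argument: Result(String, Nil)) -> Result(Int, Nil) {
  // The function is applied to the value inside an Ok;
  // an Error skips the function and is returned as it is.
  result.map(argument, string.length)
}

// length_of(Ok("passwd")) evaluates to Ok(6)
// length_of(Error(Nil)) evaluates to Error(Nil)
```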

Then we use io.debug to print the value to the screen. This function is much like io.print with a twist: the argument does not have to be a string. So it’s useful for quickly getting the “raw” (Erlang) representation of a variable without having to find a function to convert it to a string. Let’s run this program with gleam run passwd. (I chose passwd as the password. It’s not a very good password, but it satisfies the bad rule of 6 all-lowercase letters.)

The output is:

    ❯ gleam run passwd
    Compiling hello_world
    Compiled in 0.47s
    Running hello_world.main
    <<"passwd">>

<< and >> are Erlang’s way of saying this is binary data. In this case, since all the data is ASCII characters, it just puts the string between those delimiters. If they were not ASCII, the bytes would be shown as a bunch of integers.

Let’s now import gleam/crypto and calculate the hash of that password:

crypto.hash(crypto.Sha256, password) |> io.debug

This one-line example demonstrates why we need to be especially careful of how we design APIs in Gleam. In my opinion, the hash function should accept the password bitstring as the first argument. Then I could have piped the password in as part of the original pipeline.

The result of a hash call is a bitstring, but its bytes aren’t restricted to printable text. So the debug call in this case outputs the bitstring as a sequence of integers:

    ❯ gleam run password
    Compiling hello_world
    Compiled in 0.48s
    Running hello_world.main
    <<94,136,72,152,218,40,4,113,81,208,229,111,141,198,41,39,115,96,61,13,106,171,
    189,214,42,17,239,114,29,21,66,216>>

Notice how each integer is lower than 256. That’s because they represent the individual bytes (8 bits and 2 to the power of 8 is 256) of the string.

Note: It is normal to represent SHA256 values using hexadecimal strings, but I can’t find any Gleam libraries that convert bit_strings to hex. Maybe we can revisit this when we explore externals in a later article.

Generating hash candidates

Ok, let’s put on our Black Hat. We know how to convert a string to its SHA256 hash. Let’s imagine that value was stolen from a database somewhere. How do we convert the SHA256 hash back to a string?

Simple: We iterate through all the known strings that match the requirements and see which one generates that hash. Sound monotonous? You betcha it is. Luckily, you don’t have to type all those strings into a web form one at a time. Computers are really good at doing monotonous things with binary data.

Further, computers are equally good at doing monotonous things in parallel, so long as the programming environment isn’t artificially restricting you from accessing multiple CPUs.

Gleam / Erlang does not have such restrictions. So, that’s pretty sweet. We’ll get to the parallel part in a bit, but let’s first generate all those strings.

Let’s talk about modules

Lucky for us, somebody recently wrote about generating permutations of strings in Gleam.

Most languages have modules to break your code into separate namespaces, sometimes with privacy rules and sometimes without. Gleam is no exception.

Gleam uses the fairly common paradigm of “folder/filename is the module name”.

We need the letters(), permutations_recursive(), and permutations() functions from Part 3 of this series. Let’s copy them into a new file in our src folder named permute_strings.gleam:

    // src/permute_strings.gleam
    import gleam/iterator
    import gleam/string_builder

    const letters_list = [
      "a", "b", "c", "d", "e", "f", "g", "h", "i", "j", "k", "l", "m", "n", "o", "p",
      "q", "r", "s", "t", "u", "v", "w", "x", "y", "z",
    ]

    fn letters() -> iterator.Iterator(string_builder.StringBuilder) {
      letters_list
      |> iterator.from_list
      |> iterator.map(string_builder.from_string)
    }

    fn permutations_recursive(
      tails: iterator.Iterator(string_builder.StringBuilder),
      count: Int,
    ) -> iterator.Iterator(string_builder.StringBuilder) {
      case count {
        1 -> tails
        n ->
          letters()
          |> iterator.flat_map(fn(letter) {
            tails
            |> iterator.map(fn(tail) { string_builder.append_builder(letter, tail) })
          })
          |> permutations_recursive(n - 1)
      }
    }

    pub fn permutations(
      count: Int,
    ) -> Result(iterator.Iterator(string_builder.StringBuilder), String) {
      case count {
        x if x < 0 -> Error("count must be non-negative")
        0 -> Ok(iterator.empty())
        n -> Ok(permutations_recursive(letters(), n))
      }
    }

Then we can extend our main function in the main project file to calculate a few permutations and output them to the screen:

    // <other imports snipped>
    import permute_strings

    pub fn main() {
      assert Ok(password) =
        erlang.start_arguments()
        |> list.at(0)
        |> result.map(bit_string.from_string)

      crypto.hash(crypto.Sha256, password)
      |> io.debug

      assert Ok(candidates) = permute_strings.permutations(6)

      candidates
      |> iterator.map(string_builder.to_string)
      |> iterator.take(10)
      |> iterator.map(io.println)
      |> iterator.run
    }

In words, the new pipeline does the following:

- Get an iterator over StringBuilder objects from our new module.

- Convert each StringBuilder to a String using iterator.map. (Arguably, this should be done inside the permutations function.)

- Take just the first 10 elements from the iterator (to avoid epic terminal scrollyness).

- Print the elements.

- Run the iterator.

You’ll note that this returns instantly. This is because iterators are “lazy”. Nothing gets evaluated until you hit the run statement, and by this point, you’ve already cut the list down to just 10 values (take(10)). None of the other candidates ever get generated. If you want to bore yourself, try to get the number of values in this list:

    assert Ok(candidates) = permute_strings.permutations(6)
    candidates |> iterator.to_list |> list.length |> io.debug

Then run gleam run passwd. Keep reading while you wait, cause you will get bored. People from imperative languages tend to think of length as a constant time operation. This is because the ArrayList type typically used in those languages stores its own length as part of its normal record keeping, so it’s just one memory access to look it up.

However, functional languages tend to be more linked list oriented. This changes how you have to think about… well, pretty much everything, come to think of it. In this case, it means that length has to traverse every link in the list to calculate the total, so it runs in O(n) (linear) time. If you haven’t studied algorithmic complexity, then 1) study it, and 2) linear time means the more elements the more time it takes.
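To see where the linear time comes from, here is a hand-rolled sketch of what a length function has to do on a linked list. The length and count_links names are my own for this illustration; in real code you would simply call list.length.

```gleam
// A hand-rolled length, to show why counting a linked list is O(n).
pub fn length(items: List(a)) -> Int {
  count_links(items, 0)
}

fn count_links(items: List(a), so_far: Int) -> Int {
  case items {
    // Reached the end of the list: every link has been visited.
    [] -> so_far
    // One more link seen; keep walking the rest of the list.
    [_, ..rest] -> count_links(rest, so_far + 1)
  }
}
```

There is no shortcut here: the only way to learn how many links exist is to follow each one, so 300 million elements means 300 million steps.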

Go ahead and press Ctrl-C. I know it hasn’t completed yet.

So, wow. It takes a lot longer to iterate all those strings than I expected. I’m actually a bit puzzled because there should “only” be about 300 million distinct 6 element strings (26 ** 6). A third of a billion doesn’t seem like that much to ask for on a machine with 3 billion Hz *per CPU*. Granted, this code is only using one CPU because we haven’t made it parallel, but still…

Anyway, since permutations(6) is currently too unwieldy, I timed permutations(5) to calculate the < 12 million possible combinations of 5 letters and it took at least (smallest of five runs) 13.54 seconds on my machine. This is manageable for optimizing, so let’s work with it. For another benchmark, I also timed an unoptimized Python program to calculate the same thing and it took over 40 seconds. So Gleam isn’t doing so bad after all. (The Python code is a simple triple-nested loop, much easier to code and reason about than our tail recursive Gleam code).

Now, I heard that in Erlang, lists are super highly performant and maybe even preferred to iterators unless memory is an issue. Coming from a world where iterators reign supreme, I’m pretty skeptical, but let’s try converting the iterators to lists. It will make the code easier to read if nothing else!

    import gleam/string_builder
    import gleam/list

    const letters_list = [
      "a", "b", "c", "d", "e", "f", "g", "h", "i", "j", "k", "l", "m", "n", "o", "p",
      "q", "r", "s", "t", "u", "v", "w", "x", "y", "z",
    ]

    fn letters() -> List(string_builder.StringBuilder) {
      letters_list
      |> list.map(string_builder.from_string)
    }

    fn permutations_recursive(
      tails: List(string_builder.StringBuilder),
      count: Int,
    ) -> List(string_builder.StringBuilder) {
      case count {
        1 -> tails
        n ->
          letters()
          |> list.flat_map(fn(letter) {
            tails
            |> list.map(fn(tail) { string_builder.append_builder(letter, tail) })
          })
          |> permutations_recursive(n - 1)
      }
    }

    pub fn permutations(count: Int) -> Result(List(String), String) {
      case count {
        x if x < 0 -> Error("count must be non-negative")
        0 -> Ok([])
        n ->
          Ok(
            permutations_recursive(letters(), n)
            |> list.map(string_builder.to_string),
          )
      }
    }

This is little more than a find and replace on the previous code, swapping out iterator.Iterator in favour of List. At 11.70 seconds, the results support the theory that lists are more efficient than iterators. But that’s still going to be awfully high if we want to process six (or more) characters instead. This isn’t unexpected; it’s an exponential problem. Every time we increment the number of characters in a password, we’re multiplying the number of items we have to process by 26. So the total amount of time is proportional to $26^n$, where $n$ is the number of characters.
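As a rough back-of-the-envelope estimate (assuming the running time grows in direct proportion to the number of candidates, which ignores memory pressure and garbage collection), going from 5 to 6 letters multiplies the 11.70 second measurement by 26:

$$11.70\ \text{s} \times 26 \approx 304\ \text{s}$$

That is roughly five minutes on a single CPU just to build the list, before any hashing happens.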

Our attack is called “brute force” for a reason. But we need to make better use of our resources.

Introducing OTP

I’ve got 10 CPUs. Can I calculate new tails on all of them? A load average of 1000% sounds much better than 100% (if you like heating your home with CPUs, that is). Our current permutations_recursive implementation doesn’t look terribly easy to convert to parallel, but it’s not actually that bad, at least if we want to do it poorly. For each letter of the alphabet, we have to create a list of all the new tails that have that letter prepended to all the old tails. Can we do each of the letters in parallel? That would mean that each of my CPUs gets to handle about two letters (plus a bit).

Let’s first simplify our problem. Instead of trying to generate a list of all the possible candidates, let’s generate a count of them. (Yes, I know this would be easy to math as 26 to the power of 5, but stick with me). In other words, let’s reimplement length:

    pub fn count_permutations(count: Int) -> Result(Int, String) {
      case count {
        x if x < 0 -> Error("count must be non-negative")
        0 -> Ok(0)
        n ->
          Ok(
            permutations_recursive(letters(), n)
            |> list.map(string_builder.to_string)
            |> list.length
          )
      }
    }

Well that wasn’t very educational, was it? But we now have the boilerplate to introduce some parallelism! Here’s the plan: We’ll extend this function to create 26 jobs that run in parallel, and get the permutations_recursive for one count less. Then we sum the lengths. Ready?

We’ll be using OTP, the famous Erlang Open Telecom Platform for this. It doesn’t have much to do with telecommunications anymore, but that’s what it means. If you thought it meant “One Time Passcode”, that was my first guess too.

Gleam has a very elegant soundly typed interface to OTP, and we’ll use it. Of course, that means installing a package: gleam add gleam_otp. You’ll also need to import it in the permute_strings file: import gleam/otp/process.

The basic unit of concurrency in Gleam is called a process. Don’t confuse them with operating system processes (such as used by JS worker “threads” or the Python multiprocessing module). They just reused the name to confuse us.

Don’t confuse them with operating system threads, either. A Gleam/Erlang process is a sort of green thread/coroutine/goroutine. They are, according to the docs, extremely lightweight and it’s common to have tens of thousands of the little devils in a working app. We’re just going to use 26 for now (Baby steps).

Communicate Over Channels

Gleam’s other basic unit of concurrency is a channel. A channel is just two ends of a data pipe; you can send data from one process into the channel, and another process can wait for that data at the other end.

So we need 26 channels. Let’s create them by piping our letters through list.map(). Expand the recursive n -> arm in count_permutations to the following:

    n -> {
      let receivers = letters() |> list.map(fn(letter) {
        let #(sender, receiver) = process.new_channel()
        receiver
      })
      Ok(-99)
    }

Ignore the Ok(-99). I just put that there so Gleam would compile it. The function has to return what it says it’s going to return after all. Otherwise, what’s the point of sound typing?

We’re calling process.new_channel() once for each letter of the alphabet. This returns a tuple of two values. I can’t remember if we’ve encountered tuples before, so if we haven’t: A Gleam tuple is a way to collect multiple values together. It is written by enclosing the values (there can be any number, but in this case, there are two) in parentheses prefixed with a #.

Here, rather than creating a tuple, we are doing the inverse. We destructure the tuple into two separate variables using pattern matching. One gets ignored (for now), and the other is returned from map. So receivers is now a list of 26 process.Receiver values.
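In case tuples are new, here is a tiny standalone sketch of both directions: building one and pulling it apart again. The make_pair and second_only functions are hypothetical names made up for this example.

```gleam
// Build a tuple: the values go inside #( ) and may have different types.
pub fn make_pair() -> #(String, Int) {
  #("passwd", 6)
}

// Destructure it again with pattern matching, keeping only one half.
// The leading underscore marks the half we are deliberately ignoring.
pub fn second_only() -> Int {
  let #(_word, length) = make_pair()
  length
}
```

This is the same shape as the new_channel() call above, where the sender half is ignored for the moment and only the receiver is kept.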

Of course the channels aren’t very useful if all they do is exist! So let’s send something into the channel for each of those senders, and then read it from the receiver after they’re all sent. We use the obviously named process.send function (from the otp module) to send a value over the channel and the slightly less obviously named process.receive_forever function to receive that value at the other end.

The forever in the name means that the process will wait indefinitely for a value to show up. In normal programming, especially network programming, you’d be using the receive function, which accepts a timeout value and returns a Result with an error if it timed out.
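For comparison, this is roughly what the timeout flavour looks like. Treat it as a sketch under assumptions: I am assuming that receive takes the receiver first and the timeout second, and that the timeout is in milliseconds. The send_and_wait helper is a made-up name for this example.

```gleam
import gleam/otp/process

// Hypothetical helper: send one message, then wait briefly for it.
pub fn send_and_wait() -> String {
  let #(sender, receiver) = process.new_channel()
  process.send(sender, "hello")
  // Assumption: 100 means 100 milliseconds.
  case process.receive(receiver, 100) {
    // The message arrived in time.
    Ok(message) -> message
    // Nothing arrived before the timeout expired.
    Error(_) -> "timed out"
  }
}
```

The caller is forced to decide what a timeout means, which is exactly what you want when the other end might be a flaky network peer.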

For now, let’s send the (arbitrary) value 5 for each of our letters. Remember, we’re collecting all the receivers into a list, and we can do normal list operations on it, such as putting receive_forever inside a map call to extract all the values:

    n -> {
      let receivers =
        letters()
        |> list.map(fn(letter) {
          let #(sender, receiver) = process.new_channel()
          process.send(sender, 5)
          receiver
        })
      Ok(
        receivers
        |> list.map(process.receive_forever)
        |> list.fold(0, fn(acc, x) { acc + x }),
      )
    }

The list.fold at the end is summing up all the 5s we received so we can return an Int from the function like our signature promises. My main function currently looks like this (actually it contains a bunch of commented code from my various failed tests and experiments, but you can pretend it looks like this):

    import gleam/io
    import permute_strings

    pub fn main() {
      assert Ok(candidates) = permute_strings.count_permutations(5)
      candidates
      |> io.debug
    }

The output when I run this program is 130. Uncoincidentally, so is 26 * 5.

Going Parallel

Though we are using the process module, the above code is still 100% serial. Each letter is processed one after the other. The process.start function is where the magic of paralleling happens. (Paralleling is a word now).

The important thing about process.start is that it returns immediately, no matter how long the enclosing function takes to run. That means the next letter can have its processing started without waiting for the first one to complete.

It accepts a single function as an argument. Because the called function doesn’t accept any arguments, you typically need to pass it an anonymous function that has access to enclosed variables. Here’s how it looks:

    n -> {
      let receivers =
        letters()
        |> list.map(fn(letter) {
          let #(sender, receiver) = process.new_channel()
          process.start(fn() { process.send(sender, 5) })
          receiver
        })
      Ok(
        receivers
        |> list.map(process.receive_forever)
        |> list.fold(0, fn(acc, x) { acc + x }),
      )
    }

The callback function has access to the sender variable from the enclosing scope. Now the sends are truly happening in parallel. Since returning a single number takes no time at all, you probably won’t notice a difference. But we can prove parallelism by doing something more expensive: actually calculating the permutations for a given letter:

    process.start(fn() {
      let count =
        permutations_recursive(letters(), n - 1)
        |> list.length
      process.send(sender, count)
    })

For each letter, we call the permutations_recursive function with a lower number. We passed 5 to count_permutations, so now we’re passing 4 to permutations_recursive 26 separate times. Run this with time gleam run and bask in the glory of 10x CPU usage!

    Compiled in 0.54s
    Running hello_world.main
    11881376
    gleam run 10.75s user 4.61s system 904% cpu 1.699 total

I should probably figure out how to build a gleam package so that the compile time isn’t included in the time measurement, but whatever. Do the math. 10.75 seconds of user CPU time (plus 4.61 seconds of system time) was packed into 1.699 real seconds by using 904% of a CPU. Seems I’m still wasting one CPU somewhere, but I’m not going to get too agitated about it. More importantly, we can now calculate permutations of 6 letters in an achievable amount of time:

    Compiled in 0.50s
    Running hello_world.main
    308915776
    gleam run 333.07s user 210.39s system 1004% cpu 54.114 total
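The cpu percentage that time reports is total CPU time (user plus system) divided by wall-clock time. Checking the two runs against that formula:

$$\frac{10.75 + 4.61}{1.699} \approx 9.04 \quad (904\%)$$

$$\frac{333.07 + 210.39}{54.114} \approx 10.04 \quad (1004\%)$$

So the 5-letter run kept about nine CPUs busy on average, and the 6-letter run kept all ten busy.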

Counting Doesn’t Count. Let’s Hash

Counting things isn’t really that much work, though. Let’s hash the strings while we’re at it. Copy and paste the count_permutations function and make it return a Result(String, String). Shortly we’ll make it return the string it found if there is one, but for now let’s just do the hashing work and return the letter that was processed as a placeholder:

    pub fn hash_permutations(count: Int) -> Result(String, String) {
      case count {
        x if x < 0 -> Error("count must be non-negative")
        0 -> Error("No Match")
        n -> {
          let receivers =
            letters()
            |> list.map(fn(letter) {
              let #(sender, receiver) = process.new_channel()
              process.start(fn() {
                let candidate =
                  permutations_recursive(letters(), n - 1)
                  |> list.map(fn(s) {
                    let hashable =
                      string_builder.append_builder(letter, s)
                      |> string_builder.to_string
                      |> bit_string.from_string
                    crypto.hash(crypto.Sha256, hashable)
                  })
                process.send(sender, letter)
              })
              receiver
            })
          Ok(
            receivers
            |> list.map(process.receive_forever)
            |> list.fold(
              "",
              fn(acc, x) { string.append(acc, string_builder.to_string(x)) },
            ),
          )
        }
      }
    }

This function brings in a whole host of imports, so here they are for your copy-paste pleasure rather than painfully tracking them down one by one:

    import gleam/string_builder
    import gleam/list
    import gleam/otp/process
    import gleam/crypto
    import gleam/bit_string
    import gleam/io
    import gleam/string

This function looks identical to the count_permutations version until the let candidate = line, where all hell breaks loose. It first prepends the letter to the candidate string_builder, then converts the result to a string, then converts THAT to a bit_string, then uses the crypto.hash function we saw at the start of this article (remember that? I don’t. I wrote it like a month ago…) to calculate the hash.

For now, we just send the letter out and then our aggregation function appends them. Running it outputs the 26 letters of the alphabet; one for each process we started.

It takes two minutes to hash all 26 ** 6 six-letter permutations, but I’m a patient hacker. I can live with that. (It’s working my CPUs so hard that the fan kicked in and my text editor started to jitter).

Comparing The Hash

Calculating the hashes isn’t that much fun unless we can actually find the one that matches. Let’s revise the function a bit to accept the target hash and return the string if it matches. This will introduce us to some fun things in the result and option modules. Let’s add imports for them and learn a variation on the import syntax while we’re at it:

    import gleam/result
    import gleam/option.{None, Some}

That second import is how we import a value directly into our namespace. It means we can write None and Some (more on those later) instead of option.None and option.Some in our code.

Now, update the function signature to accept a target:

    pub fn hash_permutations(
      target: BitString,
      count: Int,
    ) -> Result(String, String) {
      ...

Then modify the function run inside the process with this (rather hairy) pipeline:

    process.start(fn() {
      let found =
        permutations_recursive(letters(), n - 1)
        |> list.map(fn(s) {
          let candidate =
            string_builder.append_builder(letter, s)
            |> string_builder.to_string
          let hashed_candidate =
            crypto.hash(
              crypto.Sha256,
              candidate
              |> bit_string.from_string,
            )
          case hashed_candidate {
            t if t == target -> Some(candidate)
            _ -> option.None
          }
        })
      process.send(sender, found)
    })

This code is admittedly pretty ugly. Part of the problem is that both crypto.hash and process.send accept their arguments in what I consider to be the “wrong” order, so you can’t easily pipe values into them. Part of it is probably also that I just don’t write very good Gleam code yet. And part of it is that we’re using the low level OTP API instead of more elegant models that are built on top of it (there is another article in the queue, don’t worry).

We are using the option.Option type, which is similar to Result except that rather than indicating an error condition, it indicates an optional value. The value can either be Some(value) or None. In this case, we are saying it is Some if the hash we just tested matches the target hash that was passed in. Otherwise it is None.

All those Options get sent out to the receiver to be aggregated in the list of receivers, which we now have to process as follows:

    receivers
    |> list.flat_map(process.receive_forever)
    |> list.find_map(fn (opt) {option.to_result(opt,Nil)})
    |> result.replace_error("No Match")

We pass process.receive_forever to flat_map rather than map this time, because each process now sends back a whole list of Options and we want them all in one flat list. Then we use the list.find_map function, which is used to simultaneously find the first value that matches some condition, and apply a function to convert it to a different form. It’s like calling find and map one after the other. The function passed into find_map needs to return a Result to indicate if it is a found value or not. In this case, that function uses option.to_result to create an ok Result if the value was found, or an Error result if not.

The find_map function always returns Error(Nil) if no match is found, regardless of what the inside Error type was. But I want to return a String error so the user can distinguish “No Match” Results from “count must be non-negative” results. That’s what the replace_error function does. If it’s an ok result, nothing happens; otherwise, we replace it with something that works for us.
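To watch find_map, to_result, and replace_error cooperate without any processes in the way, here is the same pipeline applied to a plain list of Options. The first_match function is a hypothetical name used only for this sketch.

```gleam
import gleam/list
import gleam/option
import gleam/result

// Hypothetical helper mirroring the pipeline above on an ordinary list.
pub fn first_match(
  options: List(option.Option(String)),
) -> Result(String, String) {
  options
  // Stop at the first Some and unwrap it; None entries are skipped.
  |> list.find_map(fn(opt) { option.to_result(opt, Nil) })
  // Swap the uninformative Error(Nil) for a readable message.
  |> result.replace_error("No Match")
}

// first_match([option.None, option.Some("passwd")]) evaluates to Ok("passwd")
// first_match([option.None, option.None]) evaluates to Error("No Match")
```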

Now you can use the following main function to hack 6 character passwords in a few minutes:

    import gleam/io
    import gleam/list
    import gleam/erlang
    import permute_strings
    import gleam/result
    import gleam/bit_string
    import gleam/crypto
    import gleam/string

    pub fn main() {
      assert Ok(password) =
        erlang.start_arguments()
        |> list.at(0)
        |> result.map(bit_string.from_string)

      let target_hash = crypto.hash(crypto.Sha256, password)

      case permute_strings.hash_permutations(target_hash, 6) {
        Ok(plaintext) -> io.println(string.append("Found ", plaintext))
        Error(message) -> io.println(message)
      }
    }

Just pass the password in as a CLI argument and let the hacking begin.

It took 2 minutes and 22 seconds to find passwd.

Summary

This article was huge. Wore my fingers out, it did. Will spend the rest of the day doing custom stretches I invented to keep RSI at bay. I hope you find value in it. I didn’t even proofread it (thanks to Brett Cannon for finding a dozen typos).

This was purportedly an introduction to OTP, but honestly, parallelism was the easiest part of this article. Channels and processes, what more do you need? We also learned how to create modules and import from them. You know what tuples look like and how to destructure them, and you’ve become more familiar with the List API.

At least, I hope you did. I know I did. If nothing else, I hope you learned to use long, unique passwords, salt your hashes, enable 2-fac, and disable password policies that artificially limit the search space.